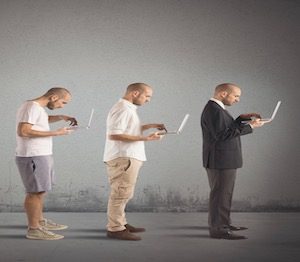

Understanding evolving roles and your present-day needs is key in staffing, training and buying for analytics and Big Data technology.

As organizations continue to take data more seriously and create dedicated teams around data initiatives, we see a plethora of “data practitioners”: Data Analysts, Data Engineers, Data Scientists, Citizen Data Scientists, Data Architects, DataOps Engineers – the list goes on.

With every new industry wave, buzzwords and hyped-up renditions of old terms create confusion about what’s changed, and why. True, the responsibilities of a “data professional” are nebulous. Some roles are specialized; others cover a lot of ground under a title that sounds pretty specific. Understandably, organizations struggle to tell them apart.

To take a deeper look at today’s different personas under the umbrella of data management and analytics, it’s important to understand how the world of data professionals has changed. Let’s do a quick review of the Three Eras of Analytics – and the rising personas of each.

Origins: The Legacy Era

In the 1990s, data warehousing and business intelligence communities formed a group of practitioners with deep technical expertise in developing data marts, star schemas, dimensional modeling, enterprise data warehouses, and ETL (Extract, Transform, Load). Report developers were another breed of data experts, sitting in centralized IT teams and mastering their skills in various BI tools as well as SQL programming. We also saw individuals responsible for the stewardship of data.

Many enterprises ended up with teams that included Data Architects/Enterprise Architects, Model Designers, Data Warehouse Administrators, ETL Developers, BI Developers/Report Designers, and Data Stewards. In many ways, this approach was successful – for a while. It introduced a very organized methodology for designing data structures that could be centrally governed to enforce discipline, accuracy, and high quality.

Round Two: The New Age Era

Up to this point, line of business users were recipients of insights: specifically, consumers of reports put together by centralized IT teams. However, in the 2000s, as BI became critical to major business initiatives, roles like Business Analysts became more popular, thanks to self-service and advanced visualization tools such as Tableau and Qlik. BI also enabled another type of data practitioner – business operations managers (e.g. marketing, sales, supply chain ops, etc.) – to rely on more than just Excel spreadsheets to do analysis.

At the same time, the development of new sources of data, such as clickstreams, introduced the use of NoSQL databases and Hadoop as vehicles to store data in an unstructured format. An approach called “schema on read” allowed stream and transaction data to be stored outside heavily structured data warehouses, with structure applied only when the data was queried. And so emerged a new set of experts in the market, focused on programming data access from these non-traditional sources. And while data warehousing and ETL remained a core component of enterprise architecture, organizations began looking for developers with MapReduce, Pig, Scala, and later, Spark skills to support the coming of age of “Big Data”.

Both these movements shook the traditional, organized ways of the data discipline. Now data was handled by more individuals – especially outside traditional IT – who learned and practiced Big Data skills. In this new era, some data remained in the structured, organized, “schema-first, load-second” model. But a new paradigm emerged: the free-form model.
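The contrast between the “schema-first, load-second” model and “schema on read” can be made concrete with a minimal sketch. It is an illustration only, not how any particular warehouse or Hadoop distribution works: the event fields, the column list, and the function names are all hypothetical.

```python
import json

# Hypothetical clickstream events; the field names are illustrative only.
RAW_EVENTS = [
    '{"user": "u1", "page": "/home", "ts": 1}',
    '{"user": "u2", "page": "/cart", "ts": 2, "referrer": "ad-42"}',
    '{"user": "u3", "ts": 3}',
]

# Schema-first, load-second: the table shape is fixed before any data arrives.
WAREHOUSE_COLUMNS = ("user", "page", "ts")


def load_schema_first(lines):
    """Check each record against the fixed columns at load time."""
    table, rejected = [], []
    for line in lines:
        record = json.loads(line)
        if set(record) == set(WAREHOUSE_COLUMNS):
            table.append(tuple(record[c] for c in WAREHOUSE_COLUMNS))
        else:
            # Extra or missing fields do not fit the predefined structure.
            rejected.append(line)
    return table, rejected


def load_schema_on_read(lines):
    """Store every raw line untouched; no structure is enforced at load time."""
    return list(lines)


def read_pages(raw_store):
    """Apply structure only at query time, pulling the one field needed."""
    return [json.loads(line).get("page") for line in raw_store]


table, rejected = load_schema_first(RAW_EVENTS)
raw_store = load_schema_on_read(RAW_EVENTS)
pages = read_pages(raw_store)
```

In this sketch the schema-first loader keeps only the one record that matches its columns exactly, while the schema-on-read store keeps all three and leaves it to each query to decide how to handle missing or extra fields.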

Addressing the Divide

This data evolution created yet another divide in the market. The first wave, advanced visualization, was easier to catch. With little visualization variety available in existing BI tools and Excel, new tools such as Tableau quickly lowered barriers to entry for many analysts and business users. The second wave, Big Data, was more complex. The lack of market knowledge around Hadoop created the opportunity for early distribution vendors, such as Cloudera and Hortonworks, to create large revenue streams from their service and training offerings.

And because of the shortage of skills and the very high demand for Big Data projects, Software Engineers such as Java/Python programmers, ETL Developers, and Data Warehouse Architects started adopting fancier titles such as Big Data Engineer, Hadoop Developer, and Hadoop Architect. As a result, data and analytic roles began falling into three camps: line of business users, who were familiar with data visualization tools; BI professionals, who were familiar with data warehouses and traditional BI tools; and Big Data developers.

The massive amounts of data present on cheap storage also created a huge opportunity, most notably for artificial intelligence (AI). Now, larger volumes of data could be processed and used to develop machine learning (ML) algorithms, as more enterprises decided to use ML and AI for business applications. The result? Another set of data professionals gained popularity: Data Scientists and, subsequently, their less technical, more business-savvy counterparts – the Citizen Data Scientists. Possessing the sexiest job of the 21st century, these individuals boasted quantitative analytic backgrounds well-suited for creating, testing and validating data models. They did, however, require production-grade data preparation and data pipelines to bring their data science projects to life. These dynamics shifted the market for the third round.

Round Three: The Fusion Era

The divide between the old school of BI and data warehousing, the new momentum of data visualization, the rapid traction of Big Data technologies, data lakes, and the desire for AI-based applications – all combined to create the need for a holistic architecture and a new breed of data professionals. These drivers have also created scenarios where data teams, data pipelines, and various data/analytic applications need to be fused together.

The mix of data practitioners on the business side, the ones that retained traditional IT roles, and those that sat in either camp but came equipped with Big Data or data science skills, made necessary both a common language and a shared platform for collaboration. So while fusing data sources is a must-have strategy for many enterprises, integrating traditional data pipelines that populate data warehouses with newer and richer data sets from clickstreams and IoT devices is not easy.

To avoid creating yet another centralized data warehouse to hold all data, a newer approach emerged. Self-service data prep satisfies the data integration and fusion requirements for both business and IT. Using data preparation, organizations could accommodate these two disciplines by striking the right balance between the ease of use that business users want and IT’s need for enterprise governance and scalability. Both were key to adoption. If the solution was easy to use but lacked automation, governance, and the ability to scale, it would never have the power to hold an enterprise together. Likewise, a solution with the bells and whistles of an enterprise platform but difficult to use would most certainly doom collaboration and adoption by business analysts.

Enter the Data Engineer

So, to create harmony and integration among the point-solutions that had stacked up over two decades, another data role emerged: Data Engineer.

By definition, the Data Engineer is a central figure for both teams – the daily go-to person for anything data-related. A Data Engineer is typically from the ETL school or from a Hadoop and Big Data development background, but with business savvy and an acute sense of data use and value. This modern-day jack-of-all-trades complements every other role, as they operationalize the insights and data analysis created by business users into enterprise data assets. They can also take centralized data and provide exploration and preparation capabilities for business communities so they, too, can gain value.

Finally, the Data Engineer may take an even larger role, preparing and engineering data for the creation of new data products and services. Doing so makes them responsible for building a new revenue stream around their organization’s data.

Now: The Rising Star CDO

Today, creating a perfect data-driven team is an engaging subject. Yet some companies fail to realize the importance of appointing a person who can provide these teams with context, direction, and strategy. This is where Chief Data Officers (CDOs) come into the picture. With data becoming integral to business strategy, the CDO is becoming a critical role in organizations. Perhaps that’s why Gartner predicts that by 2019, 90 percent of large organizations will have hired a Chief Data Officer. However, only 50 percent will succeed, due to a lack of focus on business-relevant outcome improvement.

Among many strategic responsibilities, CDOs are tasked with creating data literacy and widespread adoption of tools and techniques within their organization to ensure everyone is a better decision maker. They are responsible for enabling business strategies and innovations that maximize the value of data. They also have oversight for all the data professionals in the organization – either directly or indirectly – and should play a role when hiring new talent.

Beyond Hype: Why Data Understanding is the Most Important Criterion

Data has transformed the way organizations run their processes, along with the skills and technologies employed to drive better decisions. That is evident from the growing number of roles needed to support emerging technologies. Titles for these roles are not always accurate – with data professionals gravitating towards hyped-up notions of what the industry needs here and now. Yet there’s no denying that technology is becoming more accessible and intuitive to a wider group.

Data cleansing, preparation, modeling, and reporting are no longer the exclusive domain of IT and technical roles. Today, business users have a stake not only in what data is supposed to do, but also in how it is supposed to work. The notion of self-service – which started with building advanced visualizations – has now crept into data preparation exercises. As a result, it is accelerating data engineering and data science projects so businesses can advance faster.

There is much to consume and rationalize among new and legacy solutions. The first step: Build a solid understanding of your data before investing in new technology, people, and skills to complement your existing team.

Exploring the data you have, taking an inventory of data processes and techniques already in place, and understanding the time and resources needed to refresh your insights, source new data, make changes, and initiate new projects will give you a clear idea of your priorities and of what’s needed to keep up with the rising speed of the industry. Start by asking: What is the “time to value” of data in my organization? Is it slower or faster than we need? Answering this question truthfully is sometimes the hardest, but most critical, step towards deciding on the tools, technologies, and data professionals that will make the biggest impact.