An important part of the process is to bring in people from across disciplines, even if they have conflicting perspectives.

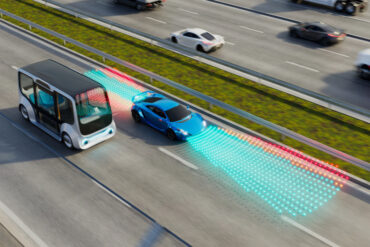

A few years back, experts and pundits alike were predicting the highways of the 2020s would be packed full of autonomous vehicles. One glance and it’s clear there are still, for better or worse, mainly human drivers out there on the roads, as driverless vehicles have hit many roadblocks. Their ability to make judgements in unforeseen events is still questionable, as is the ability of human riders to adapt and trust their robot drivers.

Autonomous vehicles are an example of a greater need for human-centered design, the theme of the recent Stanford Human-Centered Artificial Intelligence fall conference, in which experts urged more human involvement from the very start of AI development efforts. (Highlights of the conference provided by Stanford’s Shana Lynch.)

Speakers urged a new definition of human-centered AI – one that emphasizes the need for systems that improve human life and challenges problematic incentives that currently drive the creation of AI tools. Self-driving cars are an example of the need for more human factors designed into AI, said James Landay, vice director of Stanford HAI and host of the conference. “Designers must consider the needs and abilities of end users when deciding what appears on the console. They need to ensure the technology works with cyclists, pedestrians, and other non-drivers from the start. They must also talk to subject matter authorities, like transportation experts, to determine how these cars could affect the broader ecosystem.”

The design process in AI often misses a key element, conference speakers pointed out. “We do not know how to design AI systems to have a positive impact on humans,” Landay said. “There’s a better way to design AI.”

Another lesson learned was from the launch of Google Glass in 2014. At the time, its designers and promoters had visions of people going about their daily routines – shopping, attending events, and so forth – with details on the people and things they see popping up right in front of their eyes.

Google’s vision of the future in that regard has yet to pan out, explained Stanford professor Elizabeth Gerber. However, Google Glass has found widespread usage by workers in industrial settings, who require up-to-the-minute information on the equipment on which they are working. Even the most profound technology can fail, or be led down the wrong path, without accounting for human adoption. This experience needs to be applied to current work underway to develop artificial intelligence. Building “AI that people want is as important as making sure it works,” she said.

An important part of the process is to bring in people from across disciplines, even if they have conflicting perspectives, said Jodi Forlizzi, professor of computer science and associate dean for diversity, equity, and inclusion at Carnegie Mellon University. “To unpack issues like those revealed by the AI bartender, we need multidisciplinary teams made up of workers, managers, software designers, and others with conflicting perspectives,” Forlizzi said. “Experts from technology and AI, the social sciences and humanities, and domains such as medicine, law, and environmental science, are key. These experts must be true partners on a project from the start rather than added near the end.”

In addition, there needs to be a sense of what value AI can and should deliver. “We’re most often asking the question of what can these models do, but we really need to be asking what can people do with these models?” explained Saleema Amershi, senior principal research manager at Microsoft Research and co-chair of the company’s working group on human-AI interaction and collaboration. “We currently measure AI by optimizing for accuracy,” explained Amershi. But accuracy is not the sole measure of value. “Designing for human-centered AI requires human-centered metrics,” she said.