As the demand for real-time insights and decision-making continues to grow, the ability to process and analyze data instantly is becoming a competitive differentiator for organizations across all industries.

In today’s fast-paced, data-driven world, the ability to process data in real time and at scale has become a core requirement for many mission-critical applications. Real-time data processing is increasingly needed in areas such as fraud prevention, hyper-personalization, autonomous systems, and the Internet of Things (IoT). Unfortunately, traditional data processing approaches often cannot deliver insights within the time frames these applications now demand.

Why the need for real-time processing?

Traditional data processing architectures typically involve collecting data at various points, transmitting it to a centralized location for analysis, and then making decisions based on the results. While this approach works for some use cases, it is often too slow for applications that require immediate insights and actions. The latency introduced by transmitting data to a central location for processing can result in missed opportunities, security vulnerabilities, and suboptimal performance.

One of the primary reasons for the growing demand for real-time data processing is the need to make decisions closer to where the data is generated. This shift matters most in applications where decisions must be made immediately. For example:

- Fraud prevention in financial services: By processing transaction data at the edge, financial institutions can analyze patterns and anomalies in real time, allowing them to act swiftly to block suspicious activities.

- Hyper-personalization in eCommerce: By analyzing customer behavior and preferences instantly, retailers can tailor product recommendations, offers, and content to individual customers as they interact with their platforms.

- Autonomous systems: Autonomous vehicles, drones, and robots operate in environments where split-second decisions are crucial for safety and efficiency. These systems rely on real-time data from various sensors to navigate, avoid obstacles, and perform tasks autonomously. Any delay in processing this data can result in accidents or mission failures, making real-time processing at the edge a necessity.

- IoT applications: In industrial settings, IoT devices monitor equipment, track environmental conditions, and ensure operational efficiency. Real-time data processing is vital for identifying and addressing issues before they escalate, such as detecting equipment failures or optimizing energy usage.

What’s needed? Data immediacy and a real-time data platform

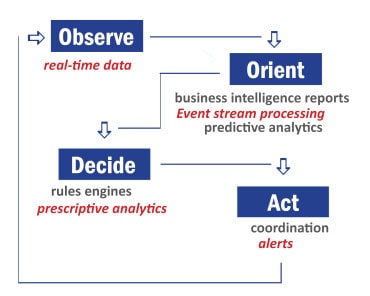

Stream processing is a key technique used in real-time data analysis. It involves the continuous processing of data streams, allowing for instant insights and decision-making. Data platforms that support stream processing can handle the ingestion, processing, and analysis of data in real time.
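To make continuous processing concrete, here is a minimal Python sketch of a sliding-window aggregate over an event stream. The `Event` type and `sliding_window_sum` function are hypothetical names chosen for illustration, not part of any particular streaming platform, and the sketch assumes events arrive in timestamp order. Each event updates the result as soon as it arrives, and only the events inside the trailing window are kept in memory.

```python
from __future__ import annotations

from collections import deque
from collections.abc import Iterable, Iterator
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical event shape: a timestamp in seconds and a numeric reading.
    timestamp: float
    value: float


def sliding_window_sum(
    events: Iterable[Event], window_seconds: float
) -> Iterator[tuple[float, float]]:
    """Yield (timestamp, sum over the trailing window) after every event.

    Assumes events arrive in timestamp order. The window covers the
    half-open interval (timestamp - window_seconds, timestamp].
    """
    window: deque[Event] = deque()
    running_sum = 0.0
    for event in events:
        # Process each event immediately instead of waiting for a batch.
        window.append(event)
        running_sum += event.value
        # Evict events that have fallen out of the trailing window.
        while window and window[0].timestamp <= event.timestamp - window_seconds:
            running_sum -= window.popleft().value
        yield event.timestamp, running_sum


if __name__ == "__main__":
    stream = [Event(0.0, 5.0), Event(1.0, 3.0), Event(2.5, 2.0), Event(4.0, 1.0)]
    for ts, total in sliding_window_sum(stream, window_seconds=3.0):
        print(ts, total)
```

Running it prints one line per event; the last line reports a sum of 3.0 at t=4.0 because the events at t=0.0 and t=1.0 have aged out of the 3-second window. Production stream processors also handle concerns this sketch ignores, such as out-of-order events and fault-tolerant state.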

But stream processing alone is not enough: there is also a need to support data immediacy. Data immediacy refers to the availability and accessibility of data at the precise moment it is needed for decision-making.

Data immediacy goes beyond using streaming data alone. It also encompasses historical data and any other relevant data that must be considered alongside the streaming data to make split-second decisions, where even a couple of milliseconds of added latency can cost millions of dollars (in the case of fraud detection) or interrupt operations.
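The idea of combining live and historical data can be sketched in a few lines of Python. In this minimal example, each incoming transaction is scored against a historical profile of its account held in memory, and the transaction is then folded into that profile so the next decision sees it. The `Transaction` and `AccountProfile` types, the 5x-average rule, and the function names are hypothetical illustrations rather than a real fraud model; a production system would draw on far richer historical features and a proper data store.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Transaction:
    account_id: str
    amount: float


@dataclass
class AccountProfile:
    # Hypothetical historical context: running average and count of past amounts.
    avg_amount: float = 0.0
    txn_count: int = 0


def score_transaction(
    txn: Transaction,
    profiles: dict[str, AccountProfile],
    ratio_threshold: float = 5.0,  # hypothetical rule: flag amounts > 5x the usual
) -> str:
    """Return 'approve' or 'review' using the live event plus historical data."""
    profile = profiles.get(txn.account_id)
    if profile is None or profile.txn_count == 0:
        # With no history, the streaming event alone gives too little context.
        return "review"
    if txn.amount > ratio_threshold * profile.avg_amount:
        return "review"
    return "approve"


def update_profile(txn: Transaction, profiles: dict[str, AccountProfile]) -> None:
    """Fold the new amount into the account's running average (in memory)."""
    profile = profiles.setdefault(txn.account_id, AccountProfile())
    profile.txn_count += 1
    profile.avg_amount += (txn.amount - profile.avg_amount) / profile.txn_count


if __name__ == "__main__":
    # Historical context, e.g. loaded ahead of time from past transactions.
    profiles = {"acct-1": AccountProfile(avg_amount=40.0, txn_count=25)}
    live_stream = [
        Transaction("acct-1", 35.0),
        Transaction("acct-1", 900.0),
        Transaction("acct-2", 10.0),
    ]
    for txn in live_stream:
        decision = score_transaction(txn, profiles)
        update_profile(txn, profiles)
        print(txn.account_id, txn.amount, decision)
```

Scoring happens before the update, so each decision uses only the history that existed when the transaction arrived. The dictionary here simply stands in for whatever low-latency store a real-time platform would provide.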

Supporting data immediacy, in turn, requires a real-time data platform. Such a platform must ingest, process, analyze, and act upon data instantaneously, as soon as it is generated or received. With a real-time data platform in place, organizations can make immediate decisions and take timely actions based on the insights they uncover.

Such platforms often use technologies like streaming data processing, in-memory databases, and advanced analytics to handle large volumes of data at high speeds.

Pulling it all together

The benefits of real-time data processing, particularly for mission-critical applications, can be significant. By processing data instantly, organizations can:

- Enhance operational efficiency: Real-time insights allow organizations to optimize their operations, reduce downtime, and improve resource allocation. This is particularly important in industries like manufacturing, where even minor inefficiencies can lead to significant cost overruns.

- Improve customer experience: In sectors like eCommerce and financial services, real-time personalization and fraud detection can lead to better customer experiences, higher satisfaction, and increased loyalty.

- Enable autonomous operations: Autonomous systems, from self-driving cars to industrial robots, rely on real-time data to function safely and effectively. Real-time processing ensures these systems can make the right decisions at the right time.

- Reduce risks: In mission-critical environments, delays in data processing can lead to significant risks, including financial losses, safety hazards, and reputational damage. Real-time processing minimizes these risks by enabling faster and more informed decision-making.

A final word

As the demand for instant insights and decision-making continues to grow, the importance of data immediacy and real-time data processing at scale for mission-critical applications cannot be overstated. From fraud prevention and hyper-personalization to autonomous systems and IoT applications, the ability to process and analyze data instantly is becoming a competitive differentiator for organizations across industries.

To stay ahead, organizations must invest in the infrastructure, technologies, and expertise needed to implement real-time data processing at scale. By doing so, they can unlock new opportunities, mitigate risks, and deliver better outcomes for their customers and stakeholders.