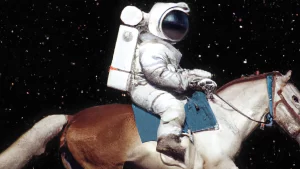

Generative AI systems that can create images from text prompts could be the first “killer app” for AI, but growing ethical concerns may slow down progress.

Artificial intelligence is at work in many different applications, from spam filters in Gmail to personalization on TikTok. However, most consumers have rarely interacted with these systems directly, since they largely operate in the background.

That may be changing with the launch of several generative systems, primarily focused on images and video. OpenAI’s DALL-E 2 opened the floodgates, as journalists and developers showed off the machine learning model’s ability to create new images from text prompts and supplemental images.


The hype surrounding these tools has led some to speculate that generative systems may be the first “killer app” for AI. They are among the first pure-play AI apps, in which the AI is front and center in the experience rather than hidden in the background.

OpenAI’s DALL-E 2 is the most talked-about generative system, but there are others. Google’s Imagen has similar functionality, but both OpenAI and Google have been wary of opening their systems to everyone. Smaller startups are trying alternative models, such as Midjourney, whose freemium service is available to all. Stability AI’s Stable Diffusion goes a step further: the company has open-sourced its software so that creators and developers can work on their own images and models.

The risks associated with generative systems are only beginning to be explored, but for now the concerns seem more economic than ethical. Artists worry that AI image generators, which can pump out millions of images at a fraction of the cost, may devalue their work. Advocates argue that opening up image creation to the world could enhance creativity.

Of course, there are also worries that misinformation and deepfakes will proliferate as AI image creators spread, since these tools often cannot understand the context behind a text prompt or recognize when a request is problematic.

AI Video Systems – More Value, More Ethical Considerations

Alongside image creators, Meta Platforms and Google have also unveiled AI video systems that produce a video from a text prompt. Like DALL-E and other image creators, these machine learning models have been trained on vast amounts of video and labeled data, which enables them to generate a video that matches the text prompt.

The value of this tool is even greater than that of image creators, as video has become the dominant format for entertainment, news, and media. TikTok became king by building an algorithmic feed for video, something Instagram is trying hard to copy with Reels. Media organizations could quickly generate videos instead of producing them themselves, and corporations would no longer need to involve a studio in the process.

With additional value inevitably come more ethical dilemmas, and the risk of images spreading misinformation or deepfakes is amplified with video. Research has shown that people are more susceptible to misinformation in video than in text or images, and it may be even harder for AI developers to build contextual awareness into video generation tools to prevent the spread of misinformation.

There are also widespread questions about whether Meta Platforms, Google, and the other tech giants are best suited to develop these models, as some accuse the platforms of already polluting social media and the internet with poorly developed, bias-riddled AI models.

It does not appear that any government or institution is pushing for this AI to be banned; however, some have called for the launch of these systems to be slowed to avoid misuse. Still, it seems only a matter of time before these systems are available to all, and many of the ethical and commercial issues will have to be dealt with as users engage with the apps.