A majority of business managers regard in-memory technologies as critical to analytics success.

As we explore how real-time capabilities are driving new innovation and business horizons here at RTInsights, we also need to acknowledge the workhorse technology behind the scenes that makes much of this possible. The technology that has probably had the most profound effect on the real-time revolution is in-memory technology. While a number of speed-enhancing technologies, such as flash-based storage and in-system caches, have come to the fore, in-memory has the greatest potential.

Essentially, in-memory technology means processing data and code within a computer's random access memory (RAM). Traditionally, data resided on disk and had to be located and brought into RAM when applications called for it. When RAM filled to capacity, data not in use was moved back to disk to make room for new data. Each disk-to-RAM transfer takes only milliseconds, of course, but multiplied across hundreds of gigabytes it adds up to a great deal of latency.
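To make the disk-to-RAM cycle concrete, here is a minimal Python sketch of a fixed-size buffer pool, the part of a system that decides which data stays in RAM. It assumes a least-recently-used (LRU) eviction policy, which is only one of several policies real databases use, and the names `BufferPool`, `get`, and `disk_reads` are hypothetical. The "disk" is simulated: each trip to it is merely counted.

```python
from collections import OrderedDict


class BufferPool:
    """Toy model of RAM that can hold only `capacity` pages at once.

    Hypothetical illustration: eviction is least-recently-used (LRU),
    and "disk" reads are simulated and counted rather than performed.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.pages = OrderedDict()  # page_id -> data, least recently used first
        self.disk_reads = 0  # number of simulated trips to disk

    def _read_from_disk(self, page_id: int) -> str:
        # Stand-in for a slow disk fetch; it only counts the access.
        self.disk_reads += 1
        return f"page-{page_id}"

    def get(self, page_id: int) -> str:
        if page_id in self.pages:
            # Hit: the page is already in RAM, so mark it most recently used.
            self.pages.move_to_end(page_id)
            return self.pages[page_id]
        # Miss: if RAM is full, evict the least recently used page first.
        if len(self.pages) >= self.capacity:
            self.pages.popitem(last=False)
        data = self._read_from_disk(page_id)
        self.pages[page_id] = data
        return data


# A working set of four pages, scanned twice.
workload = [1, 2, 3, 4] * 2

small = BufferPool(capacity=3)  # RAM too small for the working set
for page in workload:
    small.get(page)

large = BufferPool(capacity=4)  # the whole working set fits in RAM
for page in workload:
    large.get(page)

print(small.disk_reads, large.disk_reads)  # 8 4
```

With only three slots, LRU evicts each page just before it is needed again, so every one of the eight accesses goes to disk. Once the working set fits in memory, disk is touched only on the first pass, which is the in-memory argument in miniature.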

Such delays may not be noticeable on a single PC running a spreadsheet, but picture an enterprise application with hundreds of terabytes of data that must keep shuttling between disk arrays and the CPU. That takes time. By eliminating the mechanical transfer of data from disk, in-memory technology can make processing up to 2,000 times faster. With today's clustered systems, all the data stored in a RAID disk array could theoretically be moved into machine memory.
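How small per-access delays compound can be checked with back-of-the-envelope arithmetic. The sketch below assumes commonly cited ballpark latencies of roughly 10 milliseconds for a random read from a spinning disk and roughly 100 nanoseconds for a main-memory reference. Both figures are illustrative assumptions, not measurements from the survey, and the function name `total_seconds` is hypothetical. Working in integer nanoseconds avoids floating-point rounding surprises.

```python
# Assumed ballpark latencies (illustrative, not measured).
DISK_RANDOM_READ_NS = 10_000_000  # ~10 ms per random disk access
RAM_REFERENCE_NS = 100            # ~100 ns per main-memory reference


def total_seconds(accesses: int, latency_ns: int) -> float:
    """Total wait time if every access pays the same fixed latency."""
    return accesses * latency_ns / 1_000_000_000


accesses = 1_000_000  # one million random lookups

disk_s = total_seconds(accesses, DISK_RANDOM_READ_NS)
ram_s = total_seconds(accesses, RAM_REFERENCE_NS)

print(f"disk: {disk_s:,.0f} s (~{disk_s / 3600:.1f} h)")  # disk: 10,000 s (~2.8 h)
print(f"RAM:  {ram_s} s")                                  # RAM:  0.1 s
print(f"raw latency ratio: {DISK_RANDOM_READ_NS // RAM_REFERENCE_NS:,}x")  # 100,000x
```

The raw gap here is far larger than end-to-end speedups like the 2,000-times figure mentioned above, because CPU work, networking, and software overhead remain even when data sits in memory, and sequential disk reads are much faster than random ones.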

A move to in-memory

Why does such speed matter? Companies have a variety of use cases where latency could derail an entire business model, such as energy production or real-time recommendations for e-commerce, and some where speed could even save a life, such as monitoring seriously ill patients.

A recent survey I helped design and publish, “Moving Data at the Speed of Business,” explores the adoption of approaches such as in-memory and cloud for delivering real-time capabilities. Among 303 data executives and professionals surveyed, 57 percent said there is now strong demand for delivery of real-time information within their organizations. In addition, decision-making continues to be hampered by incomplete and slow-moving information. Enterprises are weighed down by inadequate performance, siloed data, and slow response times. A new data architecture and new approaches to data integration are needed.

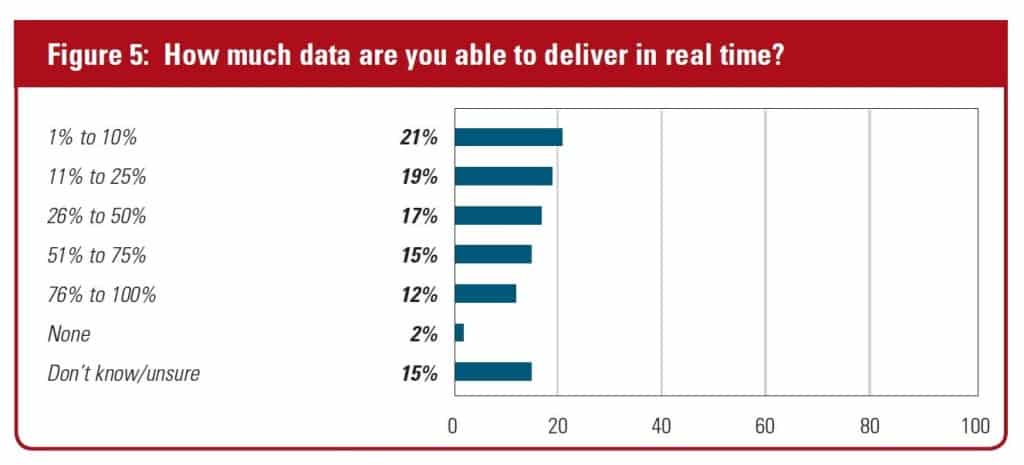

The move to in-memory technologies roughly corresponds to the explosion in uses for real-time data, including from transactions, ecommerce, social media, the IoT, and other sources. However, according to the survey, organizations do not have the correct technologies to act on this data:

Source: Independent Oracle Users Group report, produced by Unisphere Research and sponsored by Oracle.

The vast majority of businesses said their longest-running data query takes more than five minutes, with 31 percent saying it takes more than an hour (and in some cases, more than eight hours). More than half also reported that delays in getting required information inhibit decision-making.

To address this, about 28 percent of organizations now employ in-memory technologies, and another 23 percent are piloting or evaluating the approach. In-memory is seen as having a vital role to play in the success of data-driven enterprises in the months and years ahead. A majority of managers and professionals, 52 percent, regard in-memory technologies as critical to their organization’s competitiveness.

In-memory databases

In-memory database users tend to be more advanced in their use of new approaches to data integration and delivery. A majority of in-memory users are able to move data at real-time speeds through their systems, versus about one-third of those evaluating or not interested in the technology.

There are a variety of vendors of in-memory database technologies, including SAP HANA, Oracle TimesTen, IBM, VoltDB, Microsoft SQL Server, Redis, Pivotal GemFire, and Teradata In-Memory Store. There are also a number of vendors of in-memory data grids designed for operational intelligence, including ScaleOut Software, GridGain, and Software AG. (This is not an exhaustive list, and vendors differ on a number of factors, including SQL support, use of column, row, and key-value stores, and integration with open-source frameworks such as Apache Spark.)

Nevertheless, in-memory technology promises to make real-time analytics a practical part of the business. “Companies are realizing that data analysis needs to produce results that are real time and drive automated, split-second business decision making for the best possible customer experience and customer retention,” Yiftach Shoolman, co-founder and CTO of Redis Labs, explains in a recent post.

The organizations that come out ahead will be those companies “that not only can master their data but do so at lightning speeds,” Shoolman states. “Growth drivers are going to be the reward for companies that are able to mine insights and catch the newest trends fastest.”